Data collection and storage is the process of moving, preparing, and arranging data collected from its source and placing it in a location that is accessible to the next step in the decision process – analytics.

The energy crisis of the late 1970s and the 1980s fueled the evolution of centralized data collection in manufacturing shop operations. Up to that point, there was little integration between different pieces of equipment, and information from that equipment was generally either not collected or collected only for limited business purposes. The initial demand for data collection focused on the energy consumed by each piece of equipment and the utilization of that equipment.

In support of this demand, various communications technologies and equipment monitoring software applications appeared on the manufacturing shop floor. Since then, these two technologies have worked together to drive innovation on the shop floor.

As data became available, new software applications evolved, moving from basic equipment monitoring to assessing equipment utilization and performance, and then to production scheduling and parts routing and tracking. These new applications demanded exponentially more data, which in turn drove further advances in communication technologies.

As these technologies evolved, divergent approaches for assessing and managing data emerged. These divergent approaches present both opportunities and challenges for business managers. The single largest challenge they create is deciding who determines which data is collected, how often it is collected, and whether, and in what form, it is made available for other uses.

Early in the evolution of shop floor software applications, each application had its own built-in process for collecting the data its analytics needed, and those analytics tended to focus on a single topic, such as alarm monitoring, energy consumption, or overall equipment effectiveness (OEE). These types of applications are still very common today. While very efficient for their intended use, they present several challenges: (1) multiple separate applications are required to address the full range of information requirements on the shop floor, (2) because data is not shared among these applications, each one competes for network resources to access data, and (3) information generated by different applications may carry the same names yet have quite different meanings.

To address these challenges, some software applications have expanded horizontally, addressing a wider variety of analytics in a single application. These applications collect data more efficiently and have less impact on network resources. However, they leave a company highly dependent on a single vendor, because the software supplier dictates the data collection rate and the resulting analytics for each type of data collected. An emerging issue with these types of applications is "Who owns the data – the company or the software/analytics supplier?"

Today, two system architectures have evolved to address many of these past challenges. In both:

- Companies have much more control over what data is collected and how often it is collected.
- Data is easily shared among multiple applications.
- Data is fully characterized (named, scaled, etc.) so that it has the same meaning and context in every set of analytics that uses it.
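To make "fully characterized" concrete, the sketch below shows one possible way to define a data item once (its name, units, scaling, and collection rate) and to register it in a single catalog, so that two applications cannot give the same name different meanings. It is a minimal Python illustration under assumptions: `DataItem`, `DataCatalog`, the field names, and the coolant-temperature example are hypothetical, not part of any standard or product.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class DataItem:
    """Hypothetical definition of one characterized data item.

    Field names are illustrative assumptions, not a published standard.
    """
    name: str               # single agreed-upon name used by every analytic
    units: str              # engineering units after scaling
    scale: float            # multiplier applied to the raw device reading
    offset: float           # added after scaling
    sample_period_s: float  # collection interval chosen by the company

    def to_engineering(self, raw: float) -> float:
        """Convert a raw device reading into its characterized value."""
        return raw * self.scale + self.offset


class DataCatalog:
    """Registry that allows each name exactly one meaning."""

    def __init__(self) -> None:
        self._items: Dict[str, DataItem] = {}

    def register(self, item: DataItem) -> None:
        existing = self._items.get(item.name)
        if existing is not None and existing != item:
            # Same name, different meaning: the problem seen when
            # applications define their data independently.
            raise ValueError(f"{item.name!r} is already defined differently")
        self._items[item.name] = item

    def get(self, name: str) -> DataItem:
        return self._items[name]


# Example (assumed values): a controller reports coolant temperature in
# tenths of a degree Celsius, and the company samples it every 10 seconds.
COOLANT_TEMP = DataItem(
    name="machine1.coolant.temperature",
    units="degC",
    scale=0.1,
    offset=0.0,
    sample_period_s=10.0,
)

catalog = DataCatalog()
catalog.register(COOLANT_TEMP)
print(catalog.get("machine1.coolant.temperature").to_engineering(215))
```

Because every analytic looks up the same catalog entry, a raw reading is converted the same way everywhere, and a conflicting redefinition is rejected instead of silently coexisting.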

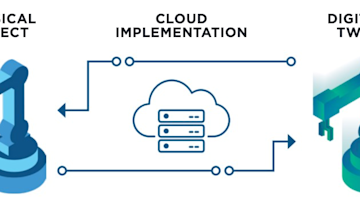

The first architecture is based on implementing a single data collection application that collects, characterizes, and stores data in a structured database. Individual software applications then access that data to perform their analytics. In this case, the company, not the software application providers, has total control of the data collection process and establishes the meaning of each piece of data. Data is collected a single time and can be used by various analytic tools. This system architecture is very extensible, allowing the addition of new analytic tools at any time without impacting existing implementations.
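The first architecture can be sketched in a few lines, assuming a single collector that writes already characterized samples into one SQLite table, plus two separate analytics (average power draw and equipment utilization) that only query that table. The table layout, function names, item names, and sample values are hypothetical; a production system would use its own database and schema.

```python
import sqlite3
import time
from typing import Optional


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Create (or open) the central store; the schema is an assumption."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS samples (
               item  TEXT NOT NULL,  -- characterized data-item name
               ts    REAL NOT NULL,  -- collection time, epoch seconds
               value REAL NOT NULL   -- value in engineering units
           )"""
    )
    return conn


def collect(conn: sqlite3.Connection, item: str, value: float,
            ts: Optional[float] = None) -> None:
    """The single collector: each sample is written to the store exactly once."""
    conn.execute(
        "INSERT INTO samples (item, ts, value) VALUES (?, ?, ?)",
        (item, time.time() if ts is None else ts, value),
    )
    conn.commit()


# Two independent analytics read the same stored data; neither talks to
# the equipment or runs its own collection process.
def average(conn: sqlite3.Connection, item: str) -> Optional[float]:
    """Mean value of an item, e.g. average power draw (None if no samples)."""
    return conn.execute(
        "SELECT AVG(value) FROM samples WHERE item = ?", (item,)
    ).fetchone()[0]


def utilization(conn: sqlite3.Connection, item: str) -> Optional[float]:
    """Fraction of samples in which a run/idle flag is nonzero."""
    return conn.execute(
        "SELECT AVG(CASE WHEN value <> 0 THEN 1.0 ELSE 0.0 END) "
        "FROM samples WHERE item = ?",
        (item,),
    ).fetchone()[0]


conn = open_store()
for t, kw in enumerate([4.0, 6.0, 5.0]):
    collect(conn, "machine1.power.kw", kw, ts=float(t))
for t, running in enumerate([1, 1, 0, 1]):
    collect(conn, "machine1.running", running, ts=float(t))

print(average(conn, "machine1.power.kw"))     # 5.0
print(utilization(conn, "machine1.running"))  # 0.75
```

Adding a third analytic here means writing one more query against the same table; the collector and the existing analytics are unchanged, which is the extensibility the architecture is meant to provide.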

The second architecture involves new network technologies that aid in the management and distribution of data. These network implementations are generally referred to as pub/sub (publish/subscribe) architectures. In these implementations, the initial data collection is performed within the network structure, and the data is also characterized at the network level. Data is then made available through one or more access points, from which individual applications access it. An additional feature of this architecture is that an application can subscribe to a specific set of data, and the network will provide (publish) new data whenever it becomes available, eliminating the need for the application to constantly poll to determine whether the data has changed. One of the main differences in this implementation is that separate data collection and data storage functions are no longer required unless a specific purpose calls for them.
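The subscribe-then-push behavior of the second architecture can be illustrated with a toy, in-process broker. This is a minimal sketch under stated assumptions: `Broker`, the topic string, and the rule of publishing only when a value changes are hypothetical choices for illustration. A real pub/sub network (for example, one built on MQTT) delivers messages over the network rather than through Python callbacks, and this code does not use any such library.

```python
from typing import Callable, Dict, List

# A subscriber callback receives the topic and the newly published value.
Handler = Callable[[str, float], None]


class Broker:
    """Toy in-process publish/subscribe broker, for illustration only."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = {}
        self._latest: Dict[str, float] = {}

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register interest in a topic; the application never polls."""
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, topic: str, value: float) -> None:
        """Push a value to subscribers, but only when it has changed.

        The change-only (report-by-exception) rule is an assumption made
        for this sketch.
        """
        if topic in self._latest and self._latest[topic] == value:
            return
        self._latest[topic] = value
        for handler in self._subscribers.get(topic, []):
            handler(topic, value)


broker = Broker()
received: List[float] = []
broker.subscribe("machine1/spindle/load", lambda topic, value: received.append(value))

# The collection layer publishes readings as it gathers them.
for reading in [42.0, 42.0, 55.0]:
    broker.publish("machine1/spindle/load", reading)

print(received)  # [42.0, 55.0]
```

Because the subscriber is called only when a new value arrives, it never has to poll the data source to find out whether anything changed.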

More modern data collection architectures have many advantages. However, there is one issue that companies need to consider in every data collection implementation. The cost of data storage has dropped significantly, which has encouraged some deployment habits that create longer-term challenges. Instead of strategically deciding which data to collect and store, the easy solution is to "Collect everything, and then we know we have whatever we may ever need in the future."

This approach of mass data collection leads to two long-term problems:

1. Traditional database systems are not well suited to such large volumes of data. Newer technologies such as "data lakes" (large data repositories) and cloud data storage are required to manage the collected data volume.
2. Finding and separating the essential data for a specific decision process becomes challenging: the proverbial "needle in the haystack."

Well-structured systems are designed to collect the data, whether in small or large quantities, that is important to the decision processes in each business situation. The key is the quality of the data, not the volume.

You can learn more about deploying a modular digital manufacturing information system in AMT’s “Digital Manufacturing” white paper series: “Overview Of Digital Manufacturing: Data-Enabled Decision-Making.”